We are all active participants in the Big Data ecosystem. Our day-to-day lives and activities generate data, and the systems around us collect that data, analyse it, and use it in their businesses to serve our lifestyles. Thanks to the internet and mobile devices, the world is more interconnected today than ever before in history. Each day we create about 2.5 quintillion (2.5 × 10^18) bytes of data. This huge volume is produced by many different industry verticals, and those verticals are using this massive amount of information to get ahead in business. But before putting such a huge amount of information to use, an industry must understand its real-world business scenarios, in short its 'use cases', so that it can implement the right solution for Big Data analysis.

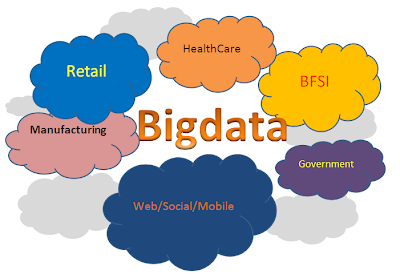

We'll focus on some key industry verticals/domains that are using, or are most likely to use, Big Data analysis. Below are some Big Data value-creation opportunities in each of them.

Financial Services:

- Fraud detection
- Model and manage risk
- Improve debt recovery rates
- Personalized banking and insurance products
- Recommendation of banking products

Retail and Consumer Packaged Goods Industry:

- Customer Care Call Centers
- Customer Sentiment Analysis
- Campaign management and customer loyalty programs
- Supply Chain Management and Logistics
- Window Shoppers
- Location based Marketing
- Predicting Purchases and Recommendations

Manufacturing Industry:

- Design to value
- Consumer Sentiment Analysis
- Crowd-sourcing
- Supply Chain Management and Logistics
- Preventive Maintenance and Repairs
- Digital factory for lean manufacturing
- Improve service via product sensor data

Healthcare:

- Optimal treatment pathways
- Remote patient monitoring
- Predictive modeling for new drugs
- Personalized medicine
- Patient behavior and sentiment data
- Pharmaceutical R&D data

Web/Social/Mobile Industry:

- Location based marketing
- Social segmentation
- Sentiment analysis
- Price comparison services
- Recommendation engines
- Advertisements/promotions and Web Campaigns

Government:

- Reduce fraud
- Segment population, customize action
- Support open data initiatives
- Automate decision making
- Election Campaigns

Data is growing explosively in every part of every vertical, and it is being generated at tremendous speed, so businesses need Big Data capabilities to address problems like these. Get ready soon, and make your business capable of taking on the big elephant of information.